📚 Deep Research & AI in Education: A Game-Changer?

Explore how Deep Research is transforming the way we conduct academic research

What if you could generate an entire research paper in minutes at the level of a PhD student? AI tools are now making this possible with a new feature called Deep Research.

Unlike a typical response from an AI search tool, Deep Research takes a far more thorough approach. It pulls from dozens of sources, analyzes them in detail, and delivers an in-depth report within minutes. Results take a bit longer to generate, but in return you get a report with much deeper analysis and an explanation of its reasoning process, rather than a simple chat reply.

This has the potential to be a game-changer for students and researchers. But what does it mean for education? Will it help students learn better, or will it negatively impact students’ critical thinking skills? In this edition, we’ll take a closer look at what Deep Research tools can do, how they work, and some of their limitations.

Here is an overview of today’s newsletter:

Overview of the Deep Research feature across three major AI models

The latest stats on AI perspectives among students, educators, and parents

Interview with a graduate student researcher at Stanford investigating knowledge-building interactions between students and LLMs

Results from a large-scale global survey of 23,218 higher education students from 109 countries on their perceptions of ChatGPT

🚀 Practical AI Usage and Policies

Deep Research is becoming a key feature in major AI tools, with OpenAI, Perplexity, and Google Gemini all recently rolling out their own versions. The feature works similarly across all three tools: instead of simply summarizing texts, it pulls information from a wide range of sources, analyzes and reasons through each of them, and then produces a comprehensive report that engages deeply with the material.

However, these tools have their limitations. They sometimes hallucinate facts, and they are limited to publicly available sources and research papers, so paywalled content is excluded. Also, despite the name, their analysis is not as deep as that of a true expert; it is more comparable to the work of a first-year PhD student.

Below is an overview of the Deep Research feature across these three models. Try them out for yourself and let us know your thoughts in the comments below!

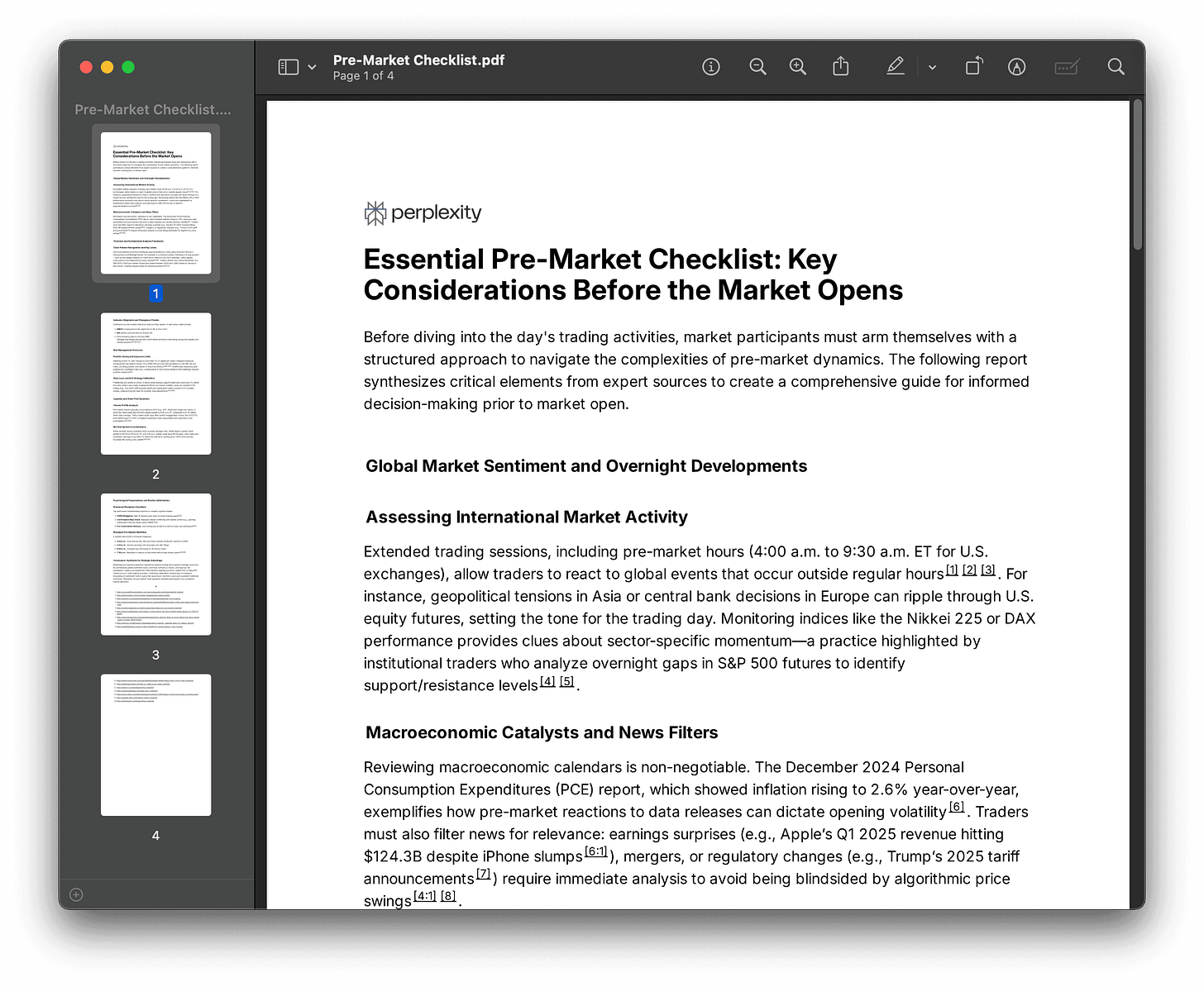

Perplexity Deep Research

Below is an example report generated by Perplexity:

You can try this feature for free by going to perplexity.ai and selecting “Deep Research” in the search box before submitting your query.

OpenAI Deep Research

As shown in the video above, the Deep Research feature can be particularly useful in the field of consulting, helping consultants gain expert-level knowledge across a wide range of topics. This feature can also benefit students by providing a deeper understanding of various research areas before they dive into their own experiments and analyses.

Here is a short guide demonstrating how to use OpenAI Deep Research. This feature is currently available only to ChatGPT Plus, Team, Enterprise, and Edu users.

Gemini Deep Research

Google released its Deep Research feature in December 2024 and made it available to all Gemini Advanced users. One benefit of Gemini Deep Research is that you can export the report directly to Google Docs and continue building on your research in the document. Google has also recently made the feature more accessible on the go by integrating it into the Gemini mobile app and Pixel devices.

While Deep Research can help students quickly compile information and sources, it is also important for students to develop the skills needed to conduct research on their own. Research empowers students to engage with diverse ideas and perspectives, apply critical thinking and analytical skills, and synthesize their own insights to develop well-informed conclusions.

If you're interested in learning more about the Deep Research feature and want a deeper look at its outputs, limitations, and comparisons across models, we recommend checking out Leon Furze’s blog and Ethan Mollick’s Substack on Deep Research below:

📊 The Latest Stats on AI Perspectives

OpenAI recently released its report, Building an AI-Ready Workforce: A Look at College Student ChatGPT Adoption in the US. The report examines student adoption rates of ChatGPT across states and explores how students use the tool in different contexts. Below is a snapshot of one of its survey results highlighting AI tool use among students aged 18 to 24:

Adobe and Advanis' Creativity with AI in Education 2025 Report highlights educators’ perspectives on integrating generative AI into classrooms to enhance student creativity, academic achievement, career readiness, and personal growth. In their survey of over 2,800 educators in the US and UK, 91% reported observing enhanced learning when students used creative AI. Additionally, 82% reported that activities involving creative AI fostered student well-being and engagement in the classroom.

Echelon Insights provides a parental perspective on generative AI through the National Parents Union December 2024 Survey, which explores the role of AI in K-12 learning. The results suggest that parents hold a largely neutral view of AI use, with 49% believing its benefits and downsides are equally balanced. Furthermore, only 54% of parents feel their children are adequately prepared to use AI effectively in the classroom.

📣 Student Voices and Use Cases

Mayank Sharma is a first-year master's student in Stanford’s Education Data Science program and a Graduate Student Researcher at the Stanford Institute for Human-Centered Artificial Intelligence (HAI). Below, we present select highlights from our conversations with him, which have been lightly edited for clarity and precision:

Q: I recently heard that you started a new position at the Institute for Human-Centered AI, where you're building benchmarks for conversations between LLMs and students. Could you share a bit about what you've been working on?

There is currently research being done to identify the mechanisms through which knowledge-building happens between students and LLMs. For instance, when I ask an LLM to help me with an assignment question, I want to know whether it respects my wish not to be given the answer. Does it spit out the answer right away, or does it nudge and probe me toward an answer? Is it culturally responsive to my needs? Is there some sort of accountability between me and the LLM? Is it helping my cognitive development in general, or is it just helping me pass an exam or an assignment?

What I am trying to do is build on a theoretical framework that researchers at Stanford’s Graduate School of Education are putting together. I want to understand whether we can simulate conversations between LLMs and students in a way that respects certain dimensions.

We're focusing on middle school environmental science. I'm generating a couple of human-like written prompts and scenarios, called seeds. Eventually, the plan is to have LLMs generate responses to them and then have a teacher annotate the responses on how well the LLM maintained cultural responsiveness, anonymity, or accountability. The larger idea is to build a benchmarking system from the collected data that defines what a knowledge-building conversation between students and LLMs should look like and serves as a resource for training and fine-tuning future education-specific LLMs.

Q: What is the current state of research regarding LLMs and their incorporation into the learning process?

Honestly, the research coming out has been conflicting. Some people say AI is taking away the productive struggle that happens in learning. Stanford graduate students published an article on exactly this subject: some amount of productive struggle is very important for students to learn and retain knowledge, and that struggle has to some extent gone away because you can simply ask AI questions and it will give you the answers.

There is also a lot of research on teachers, particularly on how AI can automate time-consuming parts of a teacher's job, such as creating essay prompts. I've read a couple of papers on essay grading, and the results have been mixed. Sometimes it does very well with chain-of-thought prompting, and it does very well when it's given a rubric developed by a teacher, as opposed to grading on its own without any context.

But there is no black-and-white consensus on whether AI is good or bad for education. It's very domain-specific, content-specific, and use-case-specific. The more refined your prompts and the more detailed your instructions, the better the job the AI can do. But the human element still needs to be present: a human has to develop the rubric the AI evaluates against. It can't do the job on its own.

Q: Reflecting on your experience in graduate school, how are you incorporating AI into your learning? Where does it work? Where does it not work?

One thing I personally struggle with is remembering matrix algebra identities and properties in math-heavy courses. Searching for them online can be time-consuming since it’s hard to find the exact property or identity, especially with so many variations. LLMs have been incredibly helpful for me here. Whenever I can’t understand how a linear algebra problem moves from one step to the next due to an identity, the LLM explains it clearly and fills in those gaps.

Another area is using LLMs as thought partners. I sometimes struggle with generating creative ideas. Even though LLMs are limited by existing knowledge, they’re great starting points to help me break through creative blocks when brainstorming for a project.

Lastly, I’ve found LLMs helpful for debugging code. While they sometimes get things wrong, especially with complex code, they’re great for simpler tasks, like plotting, where I might forget specific parameters. Overall, LLMs have been extremely helpful in these areas.

📝 Latest Research in AI + Education

PLOS One

Higher education students’ perceptions of ChatGPT: A global study of early reactions ↗️

The study presents a large-scale global survey of 23,218 higher education students from 109 countries, exploring their early perceptions of ChatGPT. It examines their usage patterns, perceived benefits, ethical concerns, and the impact on learning and career development.

Students primarily use ChatGPT for brainstorming, summarizing texts, and finding research articles. It is also seen as useful for simplifying complex concepts but less reliable for providing accurate information or fully supporting classroom learning.

A significant portion of students express concerns about ChatGPT's role in academic integrity, fearing increased plagiarism and misuse. They emphasize the necessity of AI regulations at all levels to ensure responsible usage.

While ChatGPT is perceived as beneficial for AI literacy, digital communication, and content creation, students find it less useful for interpersonal communication, decision-making, and critical thinking. Many believe it will increase demand for AI-related skills and facilitate remote work.

Students generally have a positive emotional response to ChatGPT, with curiosity and calmness being the most common emotions. The study suggests that universities should develop clear policies on AI usage, integrating ChatGPT into curricula while addressing ethical concerns and digital literacy.

Ravšelj D, Keržič D, Tomaževič N, Umek L, Brezovar N, A. Iahad N, et al. (2025) Higher education students’ perceptions of ChatGPT: A global study of early reactions. PLoS ONE 20(2): e0315011. https://doi.org/10.1371/journal.pone.0315011

Kitco Design, Google, UMass Amherst

A Benchmark for Math Misconceptions: Bridging Gaps in Middle School Algebra with AI-Supported Instruction

A dataset of 55 algebra misconceptions and 220 diagnostic examples was created to support AI-driven educational platforms in identifying and addressing student errors.

GPT-4 Turbo was tested on the dataset, achieving up to 83.9% accuracy when constrained by topic, though it struggled with ratios and proportional thinking.

80% of surveyed educators recognized the misconceptions in their classrooms, and most expressed interest in using AI to diagnose student errors and train teachers.

AI models perform better when restricted to specific topics, and the relationships between misconceptions suggest hierarchical dependencies that influence student learning.

The dataset can be used for diagnostic tools, AI-assisted tutoring, and teacher training, with future improvements focused on multimodal AI and educator feedback.

Otero N, Druga S, Lan A. (2024) A Benchmark for Math Misconceptions: Bridging Gaps in Middle School Algebra with AI-Supported Instruction. https://doi.org/10.21203/rs.3.rs-5306778/v1

📰 In the News

Yahoo! Finance / Poets & Quants

Shaping The Future Of Business Education: AI Integration At William & Mary ↗️

Key takeaways:

The Raymond A. Mason School of Business at William & Mary is incorporating AI into its curriculum to ensure students develop the skills needed to thrive in a rapidly evolving digital economy.

AI-enabled tools are removing technical barriers, allowing students from all disciplines to engage with AI-driven insights, strategic thinking, and problem-solving without requiring advanced coding expertise.

Faculty are integrating AI into coursework through hands-on applications in areas such as marketing strategy, business analytics, and leadership development, and are using tools like Microsoft Copilot and AI-driven simulations.

Research and classroom discussions focus on AI’s role in academia and industry, exploring critical topics such as transparency, responsible decision-making, and academic integrity.

The school takes an interdisciplinary approach to AI education by integrating AI across business disciplines and partnering with industry leaders to prepare students for AI-driven decision-making in the real world, including hosting an “AI in Business Education Summit” in 2025.

Pennsylvania Capital-Star

State rejects application for cyber charter school with AI teacher and two hours of daily class ↗️

Key takeaways:

The Pennsylvania Department of Education recently denied Unbound Academy’s application, citing concerns about its AI-driven instructional model, lack of alignment with academic standards, and multiple deficiencies in its proposal.

The proposed cyber charter school planned to use AI tutors with human staff as "guides," but critics, including teachers' unions, argued that AI cannot replace human instruction.

The rejection cited unrealistic enrollment projections, lack of clarity on curriculum, budgeting concerns, and insufficient support for special education students.

Lawmakers and education advocates opposed the school, and a state senator plans to introduce a moratorium on new cyber charter schools, arguing that they enable profiteering at taxpayers' expense.

Pennsylvania has 14 cyber charter schools, which have grown since COVID-19. However, concerns persist over their funding, academic performance, and spending practices, with a recent report highlighting questionable expenditures at an existing online charter school.

And that’s a wrap for this week’s newsletter! If you enjoyed our newsletter and found it helpful, please consider sharing this free resource with your colleagues, educators, administrators, and more.