👩‍🎓 AI + Students: What’s Changing

Explore how AI startups are reshaping student learning today and what this means for the future of the classroom.

OpenAI has just made its premium ChatGPT Plus subscription free for all college students in the U.S. and Canada through May, just in time for final exams! This gives students access to advanced features such as the o3-mini reasoning model, image generation, voice interaction, and powerful research tools, all designed to help them tackle their studies more effectively.

This move intensifies competition with Anthropic, which recently launched Claude for Education, a tailored version of Claude aimed at higher education institutions. It features a “learning mode” designed to help students learn by guiding their reasoning rather than simply providing answers.

The race is on: AI startups are rapidly reshaping the education landscape and changing the way students learn and work. In this edition, we take a behind-the-scenes look at these edtech startups and explore how students feel about using AI tools.

Here is an overview of today’s newsletter:

Key takeaways from edtech leaders in our recent AI x Education webinar

Insights on student perspectives and real-world use cases of AI

Exploring AI’s potential to enhance student collaboration and group work

How AI edtech platforms are making learning more accessible around the world

🚀 Practical AI Usage and Policies

⭐️ Insights from our AI x Education Webinar Series

At our recent AI x Education webinar, AI in the Classroom: Practical Insights from Leading Edtech Innovators, Bill Salak (CTO/COO of Brainly) and France Hoang (co-founder/CEO of BoodleBox) shared insights from their experience building AI-powered education platforms that serve hundreds of thousands of students worldwide. Check out the webinar recording below!

TL;DR

AI alone isn't enough: successful educational technology must address genuine friction points in today's learning landscape while complementing traditional education methods.

Educational assessment needs to shift focus from products (essays, projects) to processes, as AI can easily generate the former without indicating true student knowledge.

Future-ready education requires developing domain expertise, responsible AI usage skills, and strengthened human capabilities like collaboration and critical thinking.

⭐️ Latest Reports on AI

Anthropic Education Report: How University Students Use Claude

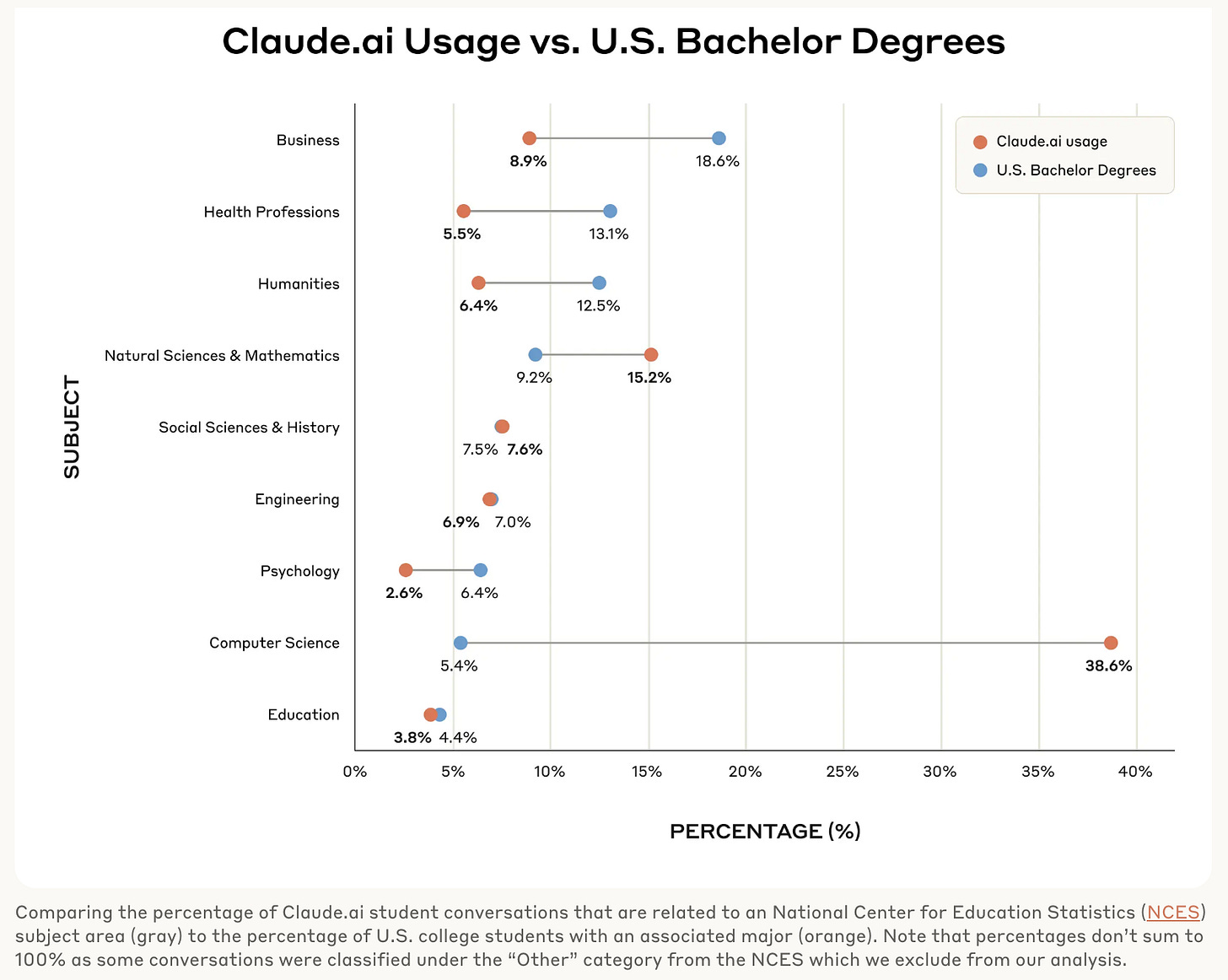

Anthropic recently analyzed over a million anonymized student conversations on Claude.ai, its AI assistant and a leading AI tool among students. The analysis revealed that STEM students, especially those studying Computer Science, are among the earliest adopters: despite accounting for just 5.4% of U.S. degrees, Computer Science students made up 36.8% of all conversations. Meanwhile, students in Business, Health, and the Humanities are adopting AI tools at noticeably lower rates relative to their enrollment.
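To put the two Computer Science percentages side by side, here is a quick back-of-the-envelope calculation. It is our own arithmetic, not a figure from the report: it simply divides a field's share of conversations by its share of degrees, and the function name is ours.

```python
def representation_ratio(share_of_conversations: float, share_of_degrees: float) -> float:
    """Return how many times larger a field's conversation share is than its degree share.

    A value above 1 means the field is overrepresented in conversations.
    """
    if share_of_degrees <= 0:
        raise ValueError("share_of_degrees must be positive")
    return share_of_conversations / share_of_degrees


# Percentages reported for Computer Science in the Anthropic analysis.
cs_ratio = representation_ratio(share_of_conversations=36.8, share_of_degrees=5.4)
print(f"Computer Science: {cs_ratio:.1f}x its share of U.S. degrees")
```

By this rough measure, Computer Science shows up in conversations at nearly seven times its share of degrees. The ratio is only a simple comparison; it does not account for differences in how often individual students use Claude.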

Below is a breakdown of how students are delegating cognitive responsibilities to AI systems on Claude, using Bloom’s Taxonomy as a framework. For more insights, check out the full report here.

Voices of Gen Z: How American Youth View and Use Artificial Intelligence

The Walton Family Foundation, GSV Ventures, and Gallup recently published a report featuring the latest survey results on Gen Z’s views on and use of AI in their lives. Many Gen Z students use AI tools regularly, with roughly 47% reporting weekly use. However, they are also anxious about what this technology could mean for the future and uncertain about how to use AI appropriately.

Many Gen Z students also report wanting stronger guidance and policies regarding when and how to use AI. To learn more about student perspectives on AI, check out the full report here.

⭐️ Additional Resources

Khan Academy’s Framework for Responsible AI in Education - Explore the core principles behind Khan Academy’s new Responsible AI Framework and how they apply it in their product development processes to create meaningful, safe, and ethical learning experiences.

The 2025 AI Index Report - Stanford’s Institute for Human-Centered Artificial Intelligence (HAI) recently released a 450-plus-page report detailing major developments in AI, from technical breakthroughs and benchmark progress to investment trends, education shifts, policy updates, and more. For a quick visualization of this data, check out AI Index 2025: State of AI in 10 Charts, a companion resource derived from the report.

Educate AI Magazine - This magazine presents first-hand accounts of the benefits and challenges of using AI to enhance, transform, and innovate in education. The latest issue features interviews with edtech leaders and offers a closer look at the current role of AI in education.

📝 Latest Research in AI + Education

🤨 How might AI impact group projects and student collaboration?

This working paper presents a field experiment conducted at Procter & Gamble to examine the impact of generative AI on teamwork. The study explored how AI can function as a “cybernetic teammate,” influencing team performance, expertise sharing, and social engagement among professionals working on real product innovation challenges.

The findings revealed that both individuals and teams using AI were more likely to generate solutions ranked in the top 10% of all submissions. Participants reported more positive emotions when using AI and found that it significantly reduced the time spent working on solutions. Additionally, AI helped break down functional silos, with professionals producing more balanced solutions that integrated both technical and commercial aspects, regardless of their background (R&D or Commercial).

Below are several ways these findings could be applied in the classroom:

The increased likelihood of generating top-quality solutions when AI is involved highlights its potential to elevate the outcomes of student projects.

AI's ability to help bridge expertise gaps suggests that AI can help students with diverse skill sets contribute effectively to projects.

The positive emotional responses associated with AI use, such as increased enthusiasm, suggest that AI tools could enhance student engagement in learning activities.

The finding that AI can foster more balanced collaboration in teams by reducing dominance effects suggests that AI could help ensure more equitable contributions in group work.

Even with high retention of AI-generated content, human input remained crucial in shaping the final solutions, emphasizing the continued importance of critical thinking and adaptation by students.

The time efficiency afforded by AI could allow students to dedicate more time to deeper learning and exploration within their projects.

Dell'Acqua, Fabrizio and Ayoubi, Charles and Lifshitz-Assaf, Hila and Sadun, Raffaella and Mollick, Ethan R. and Mollick, Lilach and Han, Yi and Goldman, Jeff and Nair, Hari and Taub, Stew and Lakhani, Karim R., The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise (March 28, 2025). Harvard Business School Strategy Unit Working Paper No. 25-043, Harvard Business School Technology & Operations Mgt. Unit Working Paper No. 25-043, Harvard Business Working Paper No. 25-043, Available at SSRN: https://ssrn.com/abstract=5188231 or http://dx.doi.org/10.2139/ssrn.5188231

🤨 I’ve been hearing about Claude for Education. How do LLMs like Claude 3.5 Haiku work?

→ On the Biology of a Large Language Model (Anthropic)

This paper from Anthropic offers a behind-the-scenes look at how their large language models work. While the paper is quite technical, it includes helpful visualizations with interactive elements like the one below to make the concepts more accessible!

Lindsey, et al., "On the Biology of a Large Language Model", Transformer Circuits, 2025.

📰 In the News

Forbes

How An AI Tutor Could Level The Playing Field For Students Worldwide ↗️

Key takeaways:

AI-powered edtech startup SigIQ.ai recently raised $9.5 million in seed funding to develop its AI tutor, which is designed to deliver personalized, one-on-one teaching at a fraction of the cost of a human tutor. Its mission is to make high-quality education more accessible to all.

The company was founded by Dr. Karttikeya Mangalam and Kurt Keutzer, a distinguished professor at the Berkeley AI Research (BAIR) Lab.

The platform follows a structured pedagogical model: it assesses student understanding, pinpoints learning gaps, designs personalized learning strategies, delivers instruction, and tracks progress.

Early results are promising: students preparing for high-stakes exams in India and for the GRE have reported increased study hours and improved performance, suggesting real potential for AI to enhance learning outcomes.

While SigIQ is currently free to use, a future shift to a premium subscription model raises critical questions about AI’s role in education: will it truly democratize access, or introduce new barriers?
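The five-step pedagogical model described above (assess, pinpoint gaps, design a strategy, deliver instruction, track progress) maps naturally onto a loop. The sketch below is a hypothetical illustration of that loop, not SigIQ's implementation: the mastery threshold, the equal-weight score blending, and every function name are our own assumptions, and the "lesson" is a placeholder string.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical cutoff above which a skill is treated as mastered.
MASTERY_THRESHOLD = 0.8


@dataclass
class Learner:
    mastery: dict[str, float]  # skill -> estimated mastery in [0, 1]
    history: list[dict[str, float]] = field(default_factory=list)


def assess(learner: Learner, quiz_scores: dict[str, float]) -> None:
    """Step 1: blend new quiz scores into the mastery estimates (equal weighting)."""
    for skill, score in quiz_scores.items():
        prior = learner.mastery.get(skill, 0.0)
        learner.mastery[skill] = 0.5 * prior + 0.5 * score


def find_gaps(learner: Learner) -> list[str]:
    """Step 2: list skills below the threshold, weakest first."""
    gaps = [s for s, m in learner.mastery.items() if m < MASTERY_THRESHOLD]
    return sorted(gaps, key=lambda s: learner.mastery[s])


def plan_session(gaps: list[str], max_topics: int = 2) -> list[str]:
    """Step 3: focus the next session on the weakest few skills."""
    return gaps[:max_topics]


def deliver(topic: str) -> str:
    """Step 4: placeholder for instruction (a real tutor might generate a lesson here)."""
    return f"Lesson on {topic}"


def track(learner: Learner) -> None:
    """Step 5: store a snapshot of mastery so progress can be reported over time."""
    learner.history.append(dict(learner.mastery))


def tutoring_session(learner: Learner, quiz_scores: dict[str, float]) -> list[str]:
    """Run one pass of the assess -> gaps -> plan -> deliver -> track loop."""
    assess(learner, quiz_scores)
    topics = plan_session(find_gaps(learner))
    lessons = [deliver(topic) for topic in topics]
    track(learner)
    return lessons


learner = Learner(mastery={"algebra": 0.9, "geometry": 0.4, "probability": 0.6})
print(tutoring_session(learner, {"geometry": 0.5, "probability": 0.7}))
# prints ['Lesson on geometry', 'Lesson on probability']
```

Keeping each step as its own function makes it easy to swap in a more sophisticated piece (for example, a probabilistic mastery model in place of the simple average) without touching the rest of the loop.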

Business Wire

Key takeaways:

Chegg launched Solution Scout, a new tool that allows students to compare solutions from multiple language models (like ChatGPT, Google Gemini, and Claude) alongside Chegg’s own proprietary content.

Solution Scout addresses the challenge students face in verifying the accuracy of online solutions, especially those generated by AI.

According to Nathan Schultz, President and CEO of Chegg, Solution Scout aims to give students a transparent comparison of solutions so they can quickly assess and understand them, letting them focus on learning rather than on scouring different AI tools.
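One simple way to think about comparing answers from several sources is to check how many of them agree. The sketch below is a hypothetical illustration of that idea, not how Solution Scout works: the answers are hard-coded strings rather than real model calls, the source names are made up, and the normalization is deliberately crude.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Crude normalization: lowercase, drop all whitespace, strip trailing periods."""
    return "".join(answer.lower().split()).rstrip(".")


def compare_solutions(answers: dict[str, str]) -> tuple[str, float, list[str]]:
    """Return the most common normalized answer, the share of sources agreeing, and dissenters."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = {source: normalize(text) for source, text in answers.items()}
    counts = Counter(normalized.values())
    top_answer, top_count = counts.most_common(1)[0]
    agreement = top_count / len(normalized)
    dissenters = [source for source, text in normalized.items() if text != top_answer]
    return top_answer, agreement, dissenters


# Hypothetical final answers from three models and one reference source.
answers = {
    "model_a": "x = 4",
    "model_b": "X=4.",
    "model_c": "x = -4",
    "reference": "x=4",
}
print(compare_solutions(answers))
# prints ('x=4', 0.75, ['model_c'])
```

Agreement is only a signal, not proof of correctness: several models can share the same mistake, which is why a student still needs to understand the worked solution.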

📣 Conversations on AI

Learnest is a 501(c)(3) non-profit organization created to promote ethical and responsible AI in education. Learnest was founded by a group of Stanford Graduate School of Education students and alumni and is currently run by Richard Tang, Xinman (Yoyo) Liu, Sophie Chen, and Anvit Garg. Hansol Lee and Mike Hardy serve as advisors.

Below are select highlights from our conversation with the team, lightly edited for clarity and precision:

Q: What inspired you to create Learnest?

People are excited that AI is finally revolutionizing education, but its potential limitations are being neglected: the harm it could cause to teachers and learners in the education space, and the long-term consequences. Very few people know what can go wrong, and we see too much excitement and enthusiasm in the space at the expense of people's understanding of the downsides.

So our first motivation is to pause and go beyond the hype commonly seen across the tech and startup world, where companies have the incentive to bring their products to market as fast as possible. There's always this race-to-market sentiment in the startup world: build the product first and test it with actual users before concrete evidence has been obtained through semi-rigorous studies. That is something we don't want to see in the education space. That’s the first reason.

The second reason is that we observed a huge chasm between the research and academic side of AI and the practice and industry side. We have many researchers, professors, and theorists working on theories, frameworks, and guidelines to think about and assess harm, to prevent it from happening, or simply to put together guidance on how AI should be used in education.

However, this work is often not very applicable to practitioners who build products on the ground. Oftentimes, we hear too much jargon and too many buzzwords like “ethics”, “transparency”, “accountability”, and “fairness”: abstract words that lack specific, implementation-driven details, a phenomenon also seen in the tech industry. It almost feels like the researchers are talking among themselves, while that knowledge is not effectively shared with practitioners. Those two reasons combined, along with LLMs becoming exponentially more powerful every month, much faster than most humans can keep up with, made us realize that this is a much bigger problem and that we need to address it now.

Q: What are some of Learnest’s current initiatives?

This year, we are planning to run a bootcamp for practitioners. We realized we needed something more practical and hands-on that helps practitioners recognize the real tradeoffs and ethical dilemmas they would face in the actual design and development of AI/GenAI education products.

Another goal for this program is to lower the threshold for people to actually implement these best practices, first by acknowledging the many barriers and frictions in place, and then by learning how to advocate for ethical Edtech practices, because they do matter. What does it take to build AI features that educators will actually trust and adopt sustainably? When does AI meaningfully improve learning (vs. just looking good on the roadmap)? How do we responsibly communicate the potential and limitations of Edtech to align expectations and prevent user backlash? We want practitioners to truly see the value of incorporating ethics at each stage of the AI Edtech lifecycle, rather than treating it as an added burden.

Q: Learnest’s description highlights the importance of teaching LLM guardrails and other safety mechanisms. Can you share more about how these technical safeguards are integrated into the curriculum?

We think about the curriculum in two parts: mind and hand. Mind is the theoretical research side of things. Research that critically questions and evaluates AI in education products deserves to be more widely shared and discussed among practitioners.

The second part is the hand: the implementation. How do we connect the theories and guidelines with the actual design and development of tools? An important part of the bootcamp is building a product that incorporates what you learn on the theoretical front.

We will cover topics including frameworks for evaluating machine learning harms, design justice, prompt engineering, RAG, agentic workflows, LLMs as evaluators, and safety guardrails, as well as fine-tuning your own large language models and aligning them with educational values.
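To give a flavor of what a "safety guardrail" can mean in practice, here is a minimal rule-based sketch that screens a draft tutor response before a student sees it. It is a hypothetical example, not part of Learnest's curriculum: the two rules (no outright final answers in a hint-only mode, and no mention of certain private details) and all names are our own assumptions. Production systems often rely on trained classifiers or an LLM acting as an evaluator rather than regular expressions.

```python
import re
from dataclasses import dataclass


@dataclass
class GuardrailResult:
    allowed: bool
    reason: str


# Hypothetical policy rules for a homework tutor.
ANSWER_PATTERN = re.compile(r"\b(the answer is|final answer)\b", re.IGNORECASE)
BLOCKED_PHRASES = ("home address", "phone number")  # e.g. a privacy rule


def check_response(response: str, hint_only: bool = True) -> GuardrailResult:
    """Screen a draft tutor response before it is shown to a student."""
    lowered = response.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            return GuardrailResult(False, f"mentions blocked phrase: {phrase}")
    if hint_only and ANSWER_PATTERN.search(response):
        return GuardrailResult(False, "reveals a final answer in hint-only mode")
    return GuardrailResult(True, "ok")


print(check_response("The answer is 42."))
print(check_response("Try isolating x on one side first."))
```

A blocked result would typically trigger a regeneration or a fallback message rather than being shown to the student as-is.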

At the end of the bootcamp, participants have the opportunity to build a capstone project on top of an existing open-source tutor produced by one of UC Berkeley's AI and education labs (https://github.com/CAHLR, led by Professor Zach Pardos). It is this synergy between theory and practice that gives participants much more room to explore different responsible AI applications in education.

And that’s a wrap for this week’s newsletter! If you enjoyed our newsletter and found it helpful, please consider sharing this free resource with your colleagues, educators, administrators, and more.